AI and Ethics?

Photo by Nathan Dumlao on Unsplash

No, seriously. Fuck...

What happens when you are ordained clergy, teach ethics as a spiritual practice, earn your living working in corporate America, and take a masterclass on AI Ethics?

If you are me -- you get really angry, frustrated, and depressed.

Spoiler alert (It's me, hi, I'm the problem, it's me.)

How Can AI Be Ethical When Business Isn't?

This is the key question I keep struggling with in class. I'm very pragmatic. I'm not an optimist. I'm not a pessimist. I fall into that subgenre known as "hopepunk." My work life is filled with mostly well-meaning people, but they are in no way incentivized to think about AI through an ethical lens. Beyond that, business itself isn't ethical.

Yeah, I know: late-stage capitalism/early-stage technofeudalism and all.

The ethics as spiritual practice that I practice and teach is called mussar. It's a 1,000-year-old Jewish approach to learning to be a decent human being. It’s all about balance. Imagine there are all these soul traits. None are inherently good or bad. All are necessary, and what matters is that they are in right relationship with each other and with how you engage with the world.

Go with me for a minute here, I promise I’ll bring it back around to AI.

Anger is a soul trait. It’s neither good nor bad. When it’s in right relationship, it can fuel necessary change. When it’s out of balance it can destroy everything, including our relationships and ourselves.

Mussar is also very behavior-focused. It’s a do-it-until-you-feel-it kind of practice. Not fake it until you make it, but figure out how good people act and try it out until it feels natural. The minute it feels comfortable, it is time to check your choices and reach for more challenging levels of “good.” And I’m Jewish, so good is not about the absence of “sin.” In Hebrew the word is tov (טוב), and Hebrew is so high-context when you engage with it spiritually that I had to write an entire post about the meaning of tov to process it.

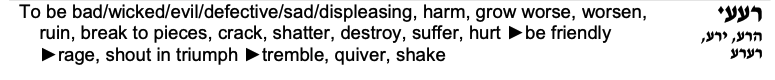

The way AI is being developed and rolled out is the opposite of tov. It’s rah (רע). Simple definition: bad, wicked, not good. But looking at the root letters (resh ayin ayin) shared by every word built on this root helps clarify exactly what the problem is.

From Shoroshim; the book of roots.


AI, as it’s being created and implemented now by most major companies, is harmful, worsens things, ruins the environment and entry-level opportunities, and hurts humans and the world around us. There are real benefits, but not when it’s being promoted in ways that erode our ability to think critically and to write even the most basic email, or that make it easy to create nude deepfakes.

The ethical frameworks the London School of Economics is asking us to apply to AI tell us that there is no ethical use of AI. And we haven’t even gotten to the copyright issues in training LLMs yet.

In our last session we explored AI through the lens of distributive justice:

Distributive justice = the fair and equitable distribution of socially and morally important goods

The first problem is that corporate America (and much of the world) doesn’t function on this premise. Not everyone even agrees that this should be the goal in principle. And even if you do, as I do, once you start diving into the WHAT and the HOW, there is no ethical, equitable way to roll out AI as it is currently envisioned. No matter how we turned that stone over, there were risks that seemed deeply unacceptable, especially in the Global South and in the poorest areas of every other region.

If everything is simply profit-driven, we put ourselves out of existence. There are real benefits to science in AI. There are real benefits to large-scale data analysis from AI. I even think there are some benefits to creativity and the arts from AI when it is applied by artists and creatives themselves. But how can we justify the negative impacts on people and the environment (which also affects people) of rolling LLMs out en masse to help us do things like write an email? In a recent ad for Google Gemini, I saw it promoted as a way to help someone decide what time to have a barbecue.

There’s article after article about how Apple is getting AI wrong. But by any ethical framework, they had been getting it right, at least until they started integrating ChatGPT. Siri was basic (PSL basic), but it did tasks that made our lives a bit easier without putting too much strain on the grid or replacing entry-level jobs.

So back to mussar as an ethical frame. When we talk about the ethics of AI, or when people DON’T, how do we put our anger to work? What is the most generous choice we could make? What about humility, which Alan Morinis defines as “no more than my place, no less than my space”? How much unnecessary space is AI taking up? What is worth getting upset about and what isn’t? What about patience? Why is there this rush? Why are we so afraid of missing out? The joy of missing out is also a soul trait. What and who are we responsible for? What about the leaders and business titans: what are their responsibilities? Is it really just to make as much money as possible?

For AI to have a chance of being ethically rolled out at scale, investors, the media, the stock market, tech titans, and all business leaders need to adopt new metrics of success. This is the only way that good choices can be made when it comes to developing and deploying AI and whatever the next shiny new tech idea will be.

Learn from the past, to live in the present for the sake of the future.

⬆️ That's the mantra.⬆️

⬇️ Here's the Mission.⬇️

Create new companies that do this.

Empower people within organizations fighting for this.

Call out those IN power who are not.

Reward media that engages in this way.

Ignore those that don't.

Make benefiting society a core measure of personal and corporate success.

And define "benefiting society" as building a society where resources are fairly, equally, and equitably distributed.